Top 3 Challenges to Securing Vendor Remote Access

Shifts Driving the Need for a Modified Approach to Secure Cloud Access

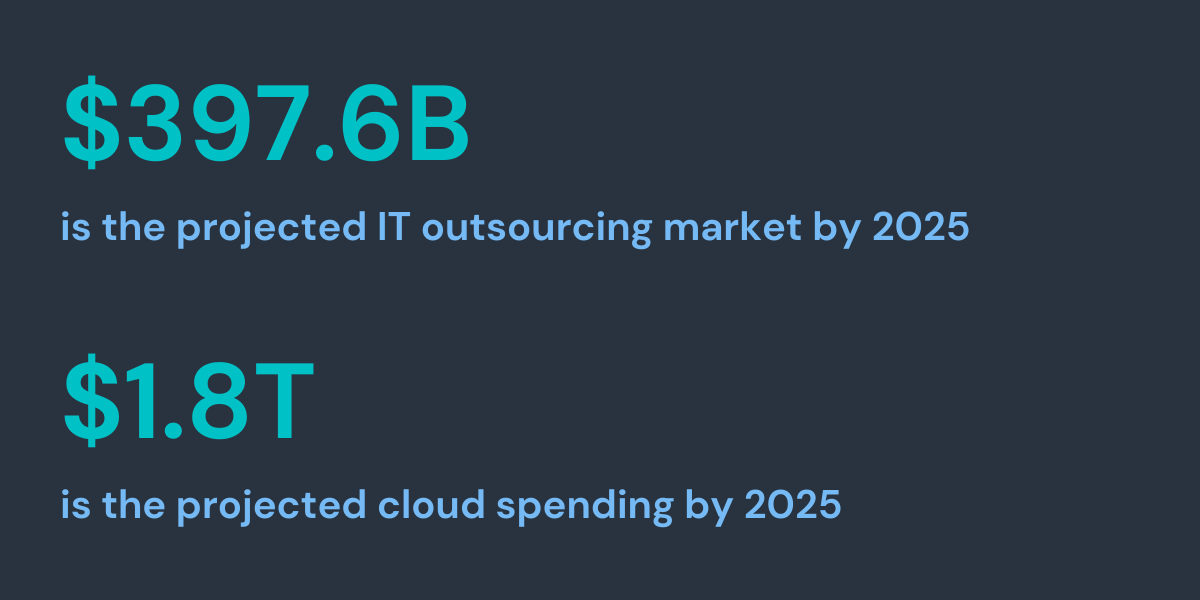

The global IT outsourcing market is projected to reach $397.6 billion by 2025. Over the same period, cloud spending is projected to reach $1.8 trillion, according to Gartner. Another shift resulting from these trends is the need for vendors and third parties to access a wide swath of critical resources within the organization. These resources include applications, servers, infrastructure, intellectual property and sensitive data. This change requires a re-evaluation of the way companies allow vendors, contractors and third parties to access these resources.

If infrastructure were a monolith, addressing this problem might be simpler. But with a cloud-dominated infrastructure, enterprises must work with the existing applications deployed on hardware, private clouds and any of the public cloud platforms. They must also deal with the rapid adoption of SaaS applications.

This means that the access mechanism granted to vendors, contractors and third parties must secure access across all these permutations.

Another consideration for organizations that leverage contractors, vendors and third parties is the lack of control over the devices used to access their infrastructure.

Some organizations overcome this by shipping devices to the users. Others deploy tools to manage the devices used by the employees of the vendor or third party. These approaches are associated with high costs and operational complexity.

Are Challenges Being Addressed Effectively?

Almost every company that allows vendors, third parties and contractors access to critical resources uses some mechanism to secure that access. To address device risk, they ship devices to users or manage the devices those users already have. Tools like VPNs and VDI are used to control network risk. Manual approaches are employed to address the risk of mismatched identities between applications. And coarse role-based access control is used to manage the risk associated with data access.

The most common tools used are VPNs, VDI and Zero-Trust Network Access (ZTNA). Since these solutions were not built for a cloud-centric world, they are riddled with problems. Some of these shortcomings are:

1. Access that does not conform to principles of least privilege access

The solutions listed above were built for a network-centric approach and not a cloud-centric world. The access they grant is overly permissive and cannot be restricted to specific resources or applications. For example, they do not have the ability to grant application-specific access. This wide-open access creates security gaps.
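The difference between network-level and application-specific access can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the subnet, the user and application names, and the functions `network_rule_allows` and `app_rule_allows` are hypothetical and do not describe any particular product.

```python
import ipaddress
from dataclasses import dataclass

# Hypothetical subnet that becomes reachable once a vendor's tunnel is up.
VENDOR_REACHABLE_SUBNET = ipaddress.ip_network("10.0.0.0/16")


def network_rule_allows(dest_ip: str) -> bool:
    """Network-centric check: every host inside the subnet is reachable."""
    return ipaddress.ip_address(dest_ip) in VENDOR_REACHABLE_SUBNET


@dataclass(frozen=True)
class AppGrant:
    """One explicit grant: a user may perform the listed actions on one application."""
    user: str
    application: str
    actions: frozenset


# Hypothetical grants; anything not listed here is denied.
GRANTS = [
    AppGrant("vendor-alice", "billing-app", frozenset({"read"})),
]


def app_rule_allows(user: str, application: str, action: str) -> bool:
    """Application-specific check with default deny."""
    return any(
        g.user == user and g.application == application and action in g.actions
        for g in GRANTS
    )
```

Under the network rule, any address in the subnet is reachable regardless of which application sits behind it. Under the application rule, the same user can read one named application and nothing else.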

Enforcing principles of least privilege access is a core tenet of zero trust. Since legacy solutions cannot provide the granular access control needed in a cloud context, they cannot be considered zero trust solutions. In addition to the wide-open access, they are not built to continuously monitor access and tune it to limit or eliminate permission abuse.

These solutions work on the principle of validating access when the initial connectivity is established between the user and the resource. This coarse level of control does not account for what happens after the initial connectivity is established. Nor does it consider the context of the specific access request.
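As a rough sketch of the alternative, a policy check can run on every request and take context into account, rather than running once when the connection is established. The signals used here (device posture, time of day), the policy table and the `evaluate` function are hypothetical assumptions chosen for illustration, not a description of how any specific solution works.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessRequest:
    """A single request, evaluated on its own rather than once per session."""
    user: str
    application: str
    action: str
    device_posture_ok: bool  # hypothetical signal reported for the device
    hour_utc: int            # hour of day (0-23) when the request was made


# Hypothetical policy: which actions each user may take on each application.
ALLOWED_ACTIONS = {
    ("vendor-alice", "billing-app"): {"read", "export"},
}

# Hypothetical rule: exports are permitted only during these hours (UTC).
EXPORT_HOURS = range(8, 18)


def evaluate(request: AccessRequest) -> bool:
    """Return True only if this specific request passes every check."""
    allowed = ALLOWED_ACTIONS.get((request.user, request.application), set())
    if request.action not in allowed:
        return False  # default deny for anything not explicitly granted
    if not request.device_posture_ok:
        return False  # context changed after the session started
    if request.action == "export" and request.hour_utc not in EXPORT_HOURS:
        return False  # same user and application, but the context is unusual
    return True
```

Because `evaluate` runs per request, a session that was valid at connection time can still be denied later, for example when the device posture changes or an export is attempted outside the permitted hours.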

2. Operational complexity

Most of the solutions listed above require deployment of onerous agents on the endpoints. Some require the devices owned by the contractor or third party to be managed by the enterprise. This adds cost and operational overhead to understaffed IT and security organizations.

Without the ability to manage the devices directly, organizations lack visibility into the access activities that originate on the device. This lack of visibility blinds them to malicious access. Furthermore, traffic between the endpoint and the resources might be encrypted, which limits the organization's ability to determine the nature of the access. Organizations often deploy intrusive and complex decryption mechanisms to examine that traffic, which introduces further cost and complexity.

3. Lack of data loss protection and prevention

Traditional solutions operated, for the most part, in a binary mode: access or no access. The levers to control and manage access to data were not built into those legacy solutions. Nor were they designed to prevent the movement of malware from the endpoint into the enterprise infrastructure. These are the key reasons that legacy solutions cannot prevent the loss of data. In fact, the very nature of these legacy technologies is often exploited to obscure malicious activity and gain access to critical resources and infrastructure.